In an update today, YouTube is claiming to have made significant progress in removing harmful videos from its platform following a June update to its content policy, which prohibited supremacist and other hateful content. The company says that this quarter it removed over 100,000 videos and terminated over 17,000 channels for hate speech, a 5x increase over Q1. It also nearly doubled the number of comments it removed, to over 500 million, in part due to an increase in hate speech removals.

The company, however, is haphazardly attempting to draw a line between what’s considered hateful content and what’s considered free speech.

As a result, according to a recent report from the U.S. Anti-Defamation League, a “significant number” of channels that disseminate anti-Semitic and white supremacist content were left online following the June 2019 changes to the content policy.

YouTube CEO Susan Wojcicki soon thereafter took to the YouTube Creator blog to defend the company’s position on the matter, arguing for the value that comes from having an open platform.

“A commitment to openness is not easy. It sometimes means leaving up content that is outside the mainstream, controversial or even offensive,” she wrote. “But I believe that hearing a broad range of perspectives ultimately makes us a stronger and more informed society, even if we disagree with some of those views.”

The videos the ADL listed included anti-Semitic content, anti-LGBTQ messages, Holocaust denial, white supremacist content and more. Five of the channels it cited had, combined, more than 81 million views.

YouTube still seems to be unsure of where it stands on this sort of content. While these videos would arguably be considered hate speech, much of this content seems to be left online. YouTube also flip-flopped last week when it removed, then quickly reinstated, the channels of two Europe-based, far-right YouTube creators who espouse white nationalist views.

Beyond the hate speech removals, YouTube also spoke today of the methodology it uses to flag content for review.

It will often use hashes (digital fingerprints) to automatically catch copies of known prohibited content before they are made public. This is a common way platforms remove child sexual abuse images and terrorist recruitment videos. However, this is not a new practice, and its mention in today’s report could be meant to deflect attention from the hateful content and the issues around it.
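To make the idea of hash matching concrete, here is a minimal sketch in Python. It is an illustration only, not YouTube's system: the class and method names are hypothetical, and it uses SHA-256, a cryptographic hash that matches only byte-identical copies. Production systems generally rely on perceptual fingerprints that survive re-encoding or cropping, which this sketch does not attempt.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest to use as the file's fingerprint."""
    return hashlib.sha256(data).hexdigest()


class UploadScreen:
    """Hypothetical pre-publication check against known prohibited files."""

    def __init__(self) -> None:
        # Only fingerprints are stored, never the prohibited files themselves.
        self._blocked: set[str] = set()

    def block(self, data: bytes) -> None:
        """Record the fingerprint of a file that was already removed."""
        self._blocked.add(fingerprint(data))

    def is_known_copy(self, data: bytes) -> bool:
        """True if this upload is byte-identical to a blocked file."""
        return fingerprint(data) in self._blocked


screen = UploadScreen()
screen.block(b"bytes of a previously removed video")

# An exact re-upload is caught before it goes public.
exact_copy_caught = screen.is_known_copy(b"bytes of a previously removed video")

# Changing even one byte defeats an exact-match hash.
altered_copy_caught = screen.is_known_copy(b"bytes of a previously removed video!")
```

The second check shows the limitation of exact matching: any edit to the file produces a different digest, which is why fingerprints designed to tolerate small changes are used in practice.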

In 2017, YouTube said it also increased its use of machine learning to help it find content similar to videos that have already been removed, even before those videos are viewed. This is effective for fighting spam and adult content, YouTube says. In some cases it can also help flag hate speech, but machines don’t understand context, so human review is still required to make the nuanced decisions.

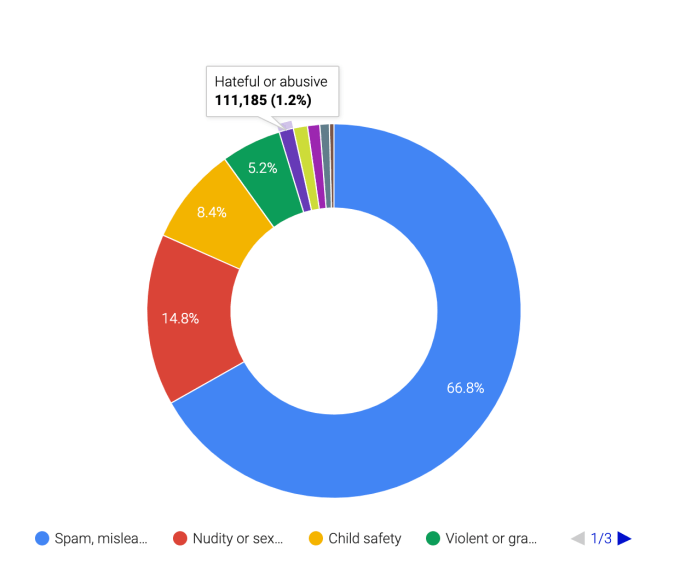

Fighting spam is fairly routine these days, as it accounts for the majority of the removals — in Q2, nearly 67% of the videos removed were spam or scams.

More than 87% of the 9 million total videos removed in Q2 were removed by automated systems, YouTube said. An upgrade to spam detection systems in the quarter led to a more than 50% increase in channels shut down for spam violations, it also noted.

The company said that more than 80% of the auto-flagged videos were removed without a single view in Q2. And it confirmed that across all of Google, there are over 10,000 people tasked with detecting, reviewing and removing content that violates its guidelines.

Again, this figure of more than 80% largely speaks to YouTube’s success in using automated systems to remove spam and porn.
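For a rough sense of scale, the percentages YouTube reported can be turned into approximate video counts. The sketch below treats "nearly 67%," "more than 87%" and "more than 80%" as exact values and assumes the auto-flagged videos are the same set as those removed by automated systems, so the results are ballpark estimates, not figures YouTube published.

```python
# Reported Q2 figures, rounded as stated in YouTube's update.
TOTAL_REMOVED = 9_000_000   # total videos removed in Q2
SPAM_PCT = 67               # "nearly 67%" were spam or scams
AUTOMATED_PCT = 87          # "more than 87%" removed by automated systems
NO_VIEW_PCT = 80            # "more than 80%" of auto-flagged removed unseen

# Integer arithmetic keeps the estimates free of floating-point noise.
spam_removed = TOTAL_REMOVED * SPAM_PCT // 100
auto_removed = TOTAL_REMOVED * AUTOMATED_PCT // 100

# Assumption: auto-flagged videos == videos removed by automated systems.
removed_unseen = auto_removed * NO_VIEW_PCT // 100

print(f"Spam or scams:       ~{spam_removed:,}")
print(f"Removed by machines: ~{auto_removed:,}")
print(f"Removed with 0 views: ~{removed_unseen:,}")
```

By this estimate, roughly 6 million of the removed videos were spam or scams, which is consistent with the point that the automation figures mostly reflect spam and adult content, not hate speech.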

Going forward, the company says it will soon release a further update to its harassment policy, first announced in April, that aims to prevent creator-on-creator harassment — as seen recently with the headline-grabbing YouTube creator feuds and the rise of “tea” channels.

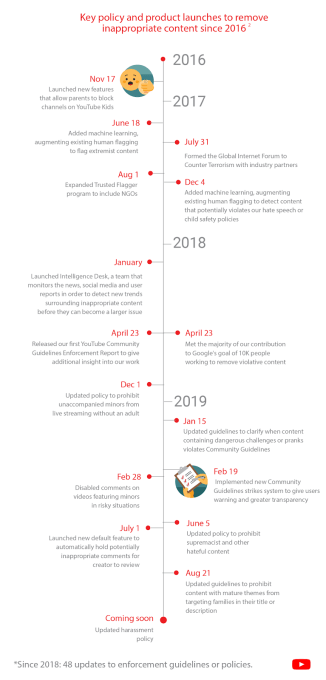

YouTube additionally shared a timeline of its content policy milestones and related product launches.

The update from YouTube comes at a critical time for the company, just ahead of a reported $200 million settlement with the FTC over alleged violations of children’s privacy laws. The fine serves as a stark reminder that, for years now, the viewers of these hate speech-filled videos haven’t only been adults interested in researching extremist content or engaging in debate, but also millions of children who today turn to YouTube for information about their world.