Apple has reportedly acquired U.K. special effects studio IKinema, a startup that may be useful in Apple’s quest to bolster its mobile devices with AR special effects and in its more far-flung attempts to enter the AR/VR headset market.

The company confirmed the deal to TechCrunch with its standard statement: “Apple buys smaller companies from time to time, and we generally don’t discuss our purpose or plans.” The news was first reported by the Financial Times after rumors were floated by MacRumors.

IKinema has used motion-tracking data to animate the body movements of digital characters live, but the team has also stockpiled this data to build realistic models of how digital characters move through digital environments, specifically in games and virtual reality titles.

These models were showcased in the startup’s RunTime product, which integrated with game engines like Epic Games’ Unreal Engine. RunTime powered avatar interactions in experiences like The Void’s “Star Wars: Secrets of the Empire,” a virtual reality experience at Disney resorts, as well as in work by studios like Capcom, Linden Lab, Microsoft Studios, Nvidia, Respawn and Square Enix.

RunTime being used in Impulse Gear’s PSVR game Farpoint

The company’s Orion product enabled motion capture from lower-cost input, essentially pairing limited input like head and hand movement with motion models to produce a hybrid result that still looked realistic. The technology was being used for visualizations by teams at NASA, Tencent and others.

What does Apple want with this company?

There are a handful of areas where this tech could prove helpful, the most obvious being special AR effects in the iOS camera, combining the spatial data the camera gathers from a real-world space with digital AR models. This could theoretically allow an AR figure to walk up your stairs or sit on a chair. What IKinema’s tech doesn’t solve in these scenarios is computer vision segmentation, that is, telling which surface is a table versus a floor versus a couch cushion, but enabling digital models to interact with these spaces is a big advance.

Where else?

Well, a bit more of a reach for Apple would be using this tech in a VR or AR avatar system. Although IKinema worked a lot with motion capture, it did so with the explicit purpose of designing models that let digital humans interact with digital environments in real time. Its solution was already being used by virtual reality developers to show VR gamers how their own bodies moved in VR from limited inputs.

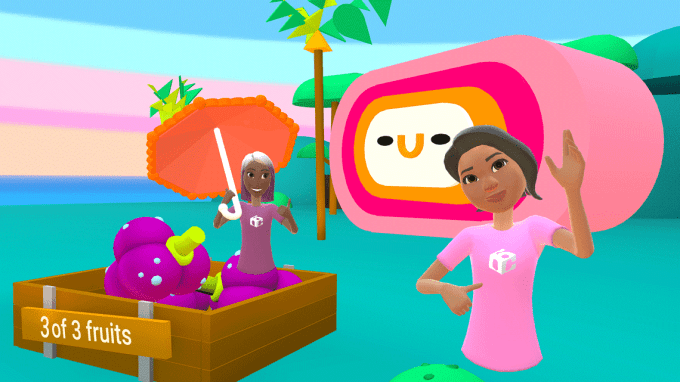

Facebook Horizon’s legless avatars

Virtual reality systems typically only know the location of your head and hands, because the trackers sit in the headset and controllers, but IKinema’s solution let developers make the rest of users’ in-game bodies look more natural. This is a difficult challenge, and it’s the reason plenty of VR titles use avatars that are missing legs, necks, arms and shoulders: everything looks awful if the movements are off.
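To give a feel for why filling in a body from a few tracked points is tricky, here is a minimal sketch of one common textbook technique, analytic two-bone inverse kinematics, in a simplified 2D arm. This is not IKinema’s method; the function name `solve_two_bone_ik`, the bone lengths and the 2D setup are all hypothetical. Given only a fixed shoulder and a tracked hand position, it infers where the elbow must be.

```python
import math


def solve_two_bone_ik(target_x, target_y, upper_len, lower_len, bend_sign=1.0):
    """Hypothetical helper: return (elbow_x, elbow_y) for a 2D two-bone chain.

    The root (shoulder) is fixed at (0, 0) and the end effector (hand) should
    land on (target_x, target_y). bend_sign picks one of the two mirror-image
    elbow solutions (+1 bends counterclockwise, -1 clockwise).
    """
    dist = math.hypot(target_x, target_y)
    # Clamp the reach so unreachable targets still yield a valid, fully
    # extended (or fully folded) pose instead of a math domain error.
    max_reach = upper_len + lower_len
    min_reach = abs(upper_len - lower_len)
    dist = max(min(dist, max_reach), min_reach)
    if dist == 0.0:
        # Degenerate case: target at the root with equal bone lengths.
        return (upper_len, 0.0)
    # Law of cosines: angle at the shoulder between the shoulder-to-target
    # line and the upper bone.
    cos_shoulder = (upper_len**2 + dist**2 - lower_len**2) / (2 * upper_len * dist)
    cos_shoulder = max(-1.0, min(1.0, cos_shoulder))  # guard against rounding
    shoulder_offset = math.acos(cos_shoulder)
    # Rotate the upper bone away from the shoulder-to-target direction.
    base_angle = math.atan2(target_y, target_x)
    elbow_angle = base_angle + bend_sign * shoulder_offset
    return (upper_len * math.cos(elbow_angle), upper_len * math.sin(elbow_angle))


if __name__ == "__main__":
    # Hypothetical arm: 0.30 m upper arm, 0.25 m forearm, hand tracked at (0.4, 0.2).
    for sign in (1.0, -1.0):
        print(sign, solve_two_bone_ik(0.4, 0.2, 0.30, 0.25, bend_sign=sign))
```

Even this toy case has two equally valid elbow positions for the same hand position. A real avatar works in 3D with many more joints and only a head and two hands to go on, so it needs extra cues or motion priors to pick a pose that looks natural, which is where models built from motion data can help.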

Apple’s computer vision needs are only growing as it pushes forward on AR and VR devices while also aiming to make the iPhone camera a point of differentiation from Google and Samsung devices.