A real check on Facebook CEO Mark Zuckerberg’s control is finally coming in the form of an 11- to 40-member Oversight Board that will review appeals of Facebook’s policy decisions, such as content takedowns, and make recommendations for changes. Today Facebook released the charter establishing the theoretically independent Oversight Board, with Zuckerberg explaining that when it takes a stance, “The board’s decision will be binding, even if I or anyone at Facebook disagrees with it.”

Slated to be staffed up this year with members who will be paid by a Facebook-established trust (the biggest update to its January draft charter), the Oversight Board will begin judging cases in the first half of 2020. Given Zuckerberg’s overwhelming voting control of the company, and the fact that its board of directors contains many loyalists like COO Sheryl Sandberg and investor Peter Thiel whom he’s made very rich, the Oversight Board could ensure the CEO doesn’t always have the final say in how Facebook works.

But in some ways, the committee could serve to shield Zuckerberg and Facebook from scrutiny and regulation, much to their advantage. The Oversight Board could remove total culpability for policy blunders around censorship or political bias from Facebook’s executives. It also might serve as a talking point with the FTC and other regulators investigating the company for potential antitrust violations and other malpractice, as Facebook could claim the Oversight Board means it’s not completely free to pursue profit over what’s fair for society.

Finally, there remain serious concerns about how the Oversight Board is selected and the wiggle room the charter provides Facebook. Most glaringly, Facebook itself will choose the initial members and then work with them to select the rest of the board, and thereby could avoid adding overly incendiary figures. And it maintains that “Facebook will support the board to the extent that requests are technically and operationally feasible and consistent with a reasonable allocation of Facebook’s resources”, giving it the right to decide if it should apply the precedent of Oversight Board verdicts to similar cases or broadly implement its policy guidance.

How The Oversight Board Works

When a user disagrees with how Facebook enforces its policies, and with the result of an appeal to Facebook’s internal moderation team, they can request an appeal to the Oversight Board. Examples of potential cases include someone disagreeing with Facebook’s refusal to deem a piece of content as unacceptable hate speech or bullying, its choice to designate a Page as promoting terrorism and remove it, or the company’s decision to leave problematic content such as nudity up because it’s newsworthy. Facebook can also directly ask the Oversight Board to review policy decisions or specific cases, especially urgent ones with real-world consequences.

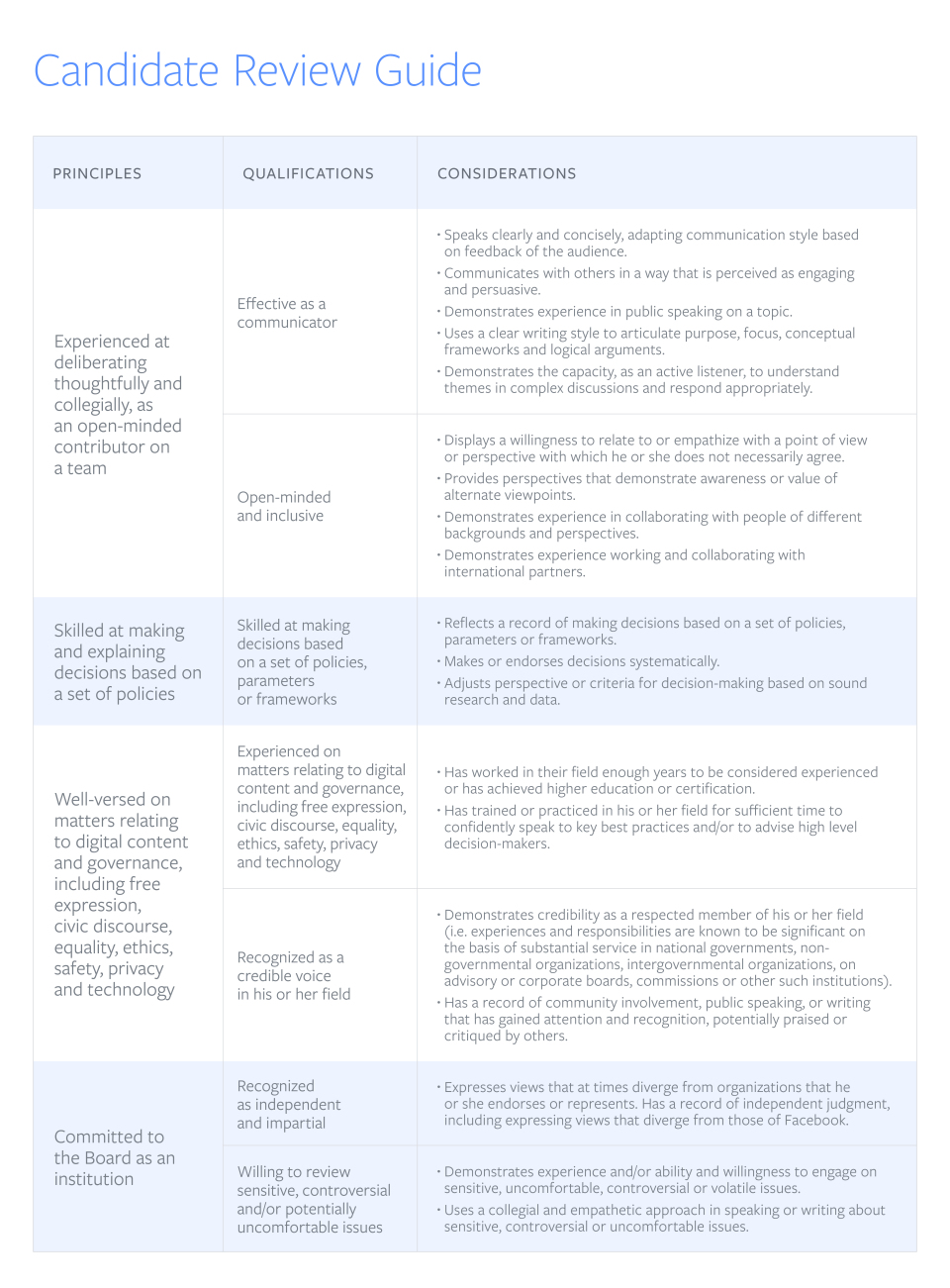

The board will include a minimum of 11 members, but Facebook is aiming for 40. They’ll serve three-year terms and a maximum of three terms each as a part-time job, with appointments staggered so there isn’t a full change-over at any time. Facebook is looking for members with a broad range of knowledge, competencies, and expertise who lack conflicts of interest. They’re meant to be “experienced at deliberating thoughtfully and collegially”, “skilled at making and explaining decisions based on a set of policies”, “well-versed on matters relating to digital content and governance”, and “independent and impartial”.

Facebook will appoint a set of trustees that will work with it to select initial co-chairs for the board, who will then assist with sourcing, vetting, interviewing, and orienting new members. The goal is “broad diversity of geographic, gender, political, social and religious representation”. The trust, funded by Facebook, will set members’ compensation rate in the near future and oversee term renewals.

What Cases Get Reviewed

The board will choose which cases to review based on their significance and difficulty. It’s looking for issues that are severe, large-scale, and important for public discourse, and that raise difficult questions because Facebook’s policy or enforcement is disputed, uncertain, or represents tension or trade-offs between Facebook’s recently codified values of authenticity, safety, privacy, and dignity. The board will then create a sub-panel of members to review a specific case.

The board will be able to request that Facebook provide information necessary to rule on the case, with a mind to not violating user privacy. Members will interpret Facebook’s Community Standards and policies and then decide whether Facebook should remove or restore a piece of content and whether it should change how that content was designated.

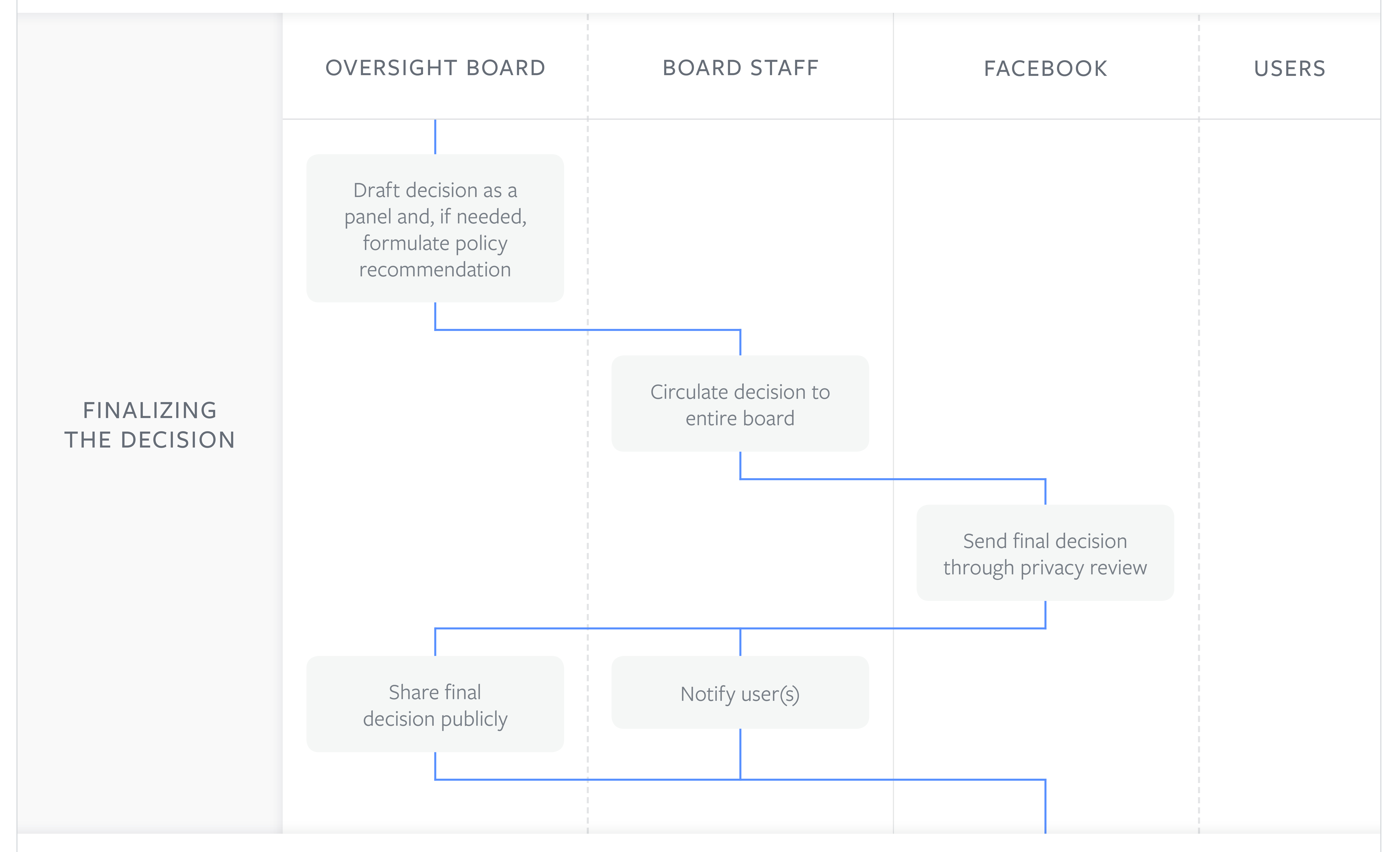

Once a panel makes a draft decision, it’s circulated to the full board, which can recommend that a new panel review it if a majority takes issue with the verdict. Once decisions have gone through a privacy review to protect the identities of those involved with the case, they will be made public. Those decisions will be archived in a database, and are meant to act as precedent for future decisions.